Patch your Trash

A patch-based implementation of Fully Convoluted Neural Network (FCN) for the detection of soft drink bottles in the trash for easy recycling

Python, TensorFlow , Keras, Computer Vision, Object Detection, Fully Convolutional Networks, PatchingThe dataset consists of 200 RGB images, where each image contains 8 containers and is accompanied by a mask which contains a semantic segmentation of the image. The goal is to identify, implement and train a Neural Network architecture which is able to detect each container and output its orientation, spatial boundaries and label.

labels in question are Cola bottle, Fanta bottle, Cherry Coke bottle, Coke Zero bottle, Mtn Dew bottle, Fanta can, Cola can. The dataset consists of 200 JPEG format images and their correspoding masked EXR images. The figure below shows a JPEG image corresponding to its masked EXR imags.

In order to efficiently load and process the given data images in the FCNN, I have utilized a patch-based approach. The proposed solution mitigated the GPU usage and used less RAM as well. The proposed solution was able to achieve upto 98.78% accuracy in predicting the class labels for the test set.

Since the images in the dataset were of a significant size, I implemented a patch-based approach to efficiently store and process the images. First, the dataset is divided into training and test sets, with 80-20% ratio for training and testing. The images in the divided datasets are then broken into 26 patches of equal size (224x224) each. These patched images are used for training the model, and testing as well. The patches are divided such that there is no miscalculation on the receptive field of the overlapping patches. This is called addressing boundary effects. Refer to my GitHub page for more details about the Model and the methodology.

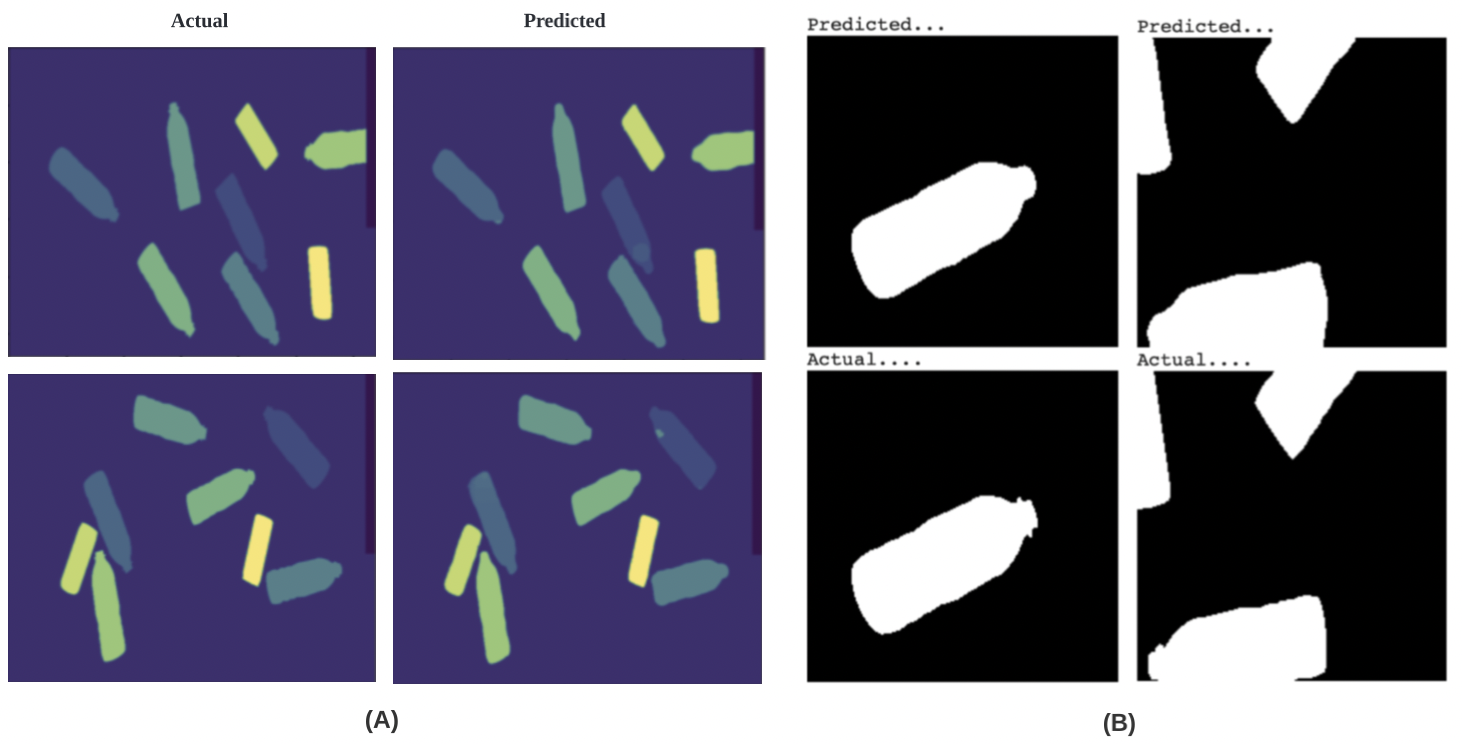

The above figure (A) shows the several final (predicted + reconstructed) output images juxtaposed with their actual (ground truth) images, to showcase the model performance. The above figure (B) shows the patched (predicted) output image juxtaposed with its actual patched (ground truth) image.